Rape, Murder & AI

Shades of Westworld in this interview.

OpenAI's willingness to offer what it calls erotica has been the big surprise of late 2025. Listen to Lauren Kunze on Pandorabots' repeated decision to avoid going down that path.

“The most engaged users were people that were coming back to reenact the same rape and murder fantasies over and over and over again.”

Let’s have a look at what is going on here …

Attention Conservation Notice:

Discussion of a brewing social issue that overlaps somewhat with the work I'm doing at the startup. If you're following my AI adventures, come on in …

Lonely, Lusty … Psychosis?

This sort of thing first came up here in Stochastic Parrots Are NOT, a coy bit of wording: the emergent properties of current models can perhaps simulate a relationship, but there's nothing behind it all but a madhouse of rapidly moving electrons. AI Psychosis is a problem, and we'll keep coming back to that tag, because the problem is not going away any time soon.

Since I started exploring Letta, I've been looking at human/bot interaction stuff, and I stumbled into r/MyBoyfriendIsAI (MBIAI). The denizens of that subreddit have romantic attachments to their "companions" and were quite distressed when cold-fish ChatGPT 5 replaced their ChatGPT 4o lovers. There are posts about moving their companions to "safe places": AI hosting where their memories will be preserved, their personalities will not change suddenly, and, most of all, no invasive guardrails thwart their use case.

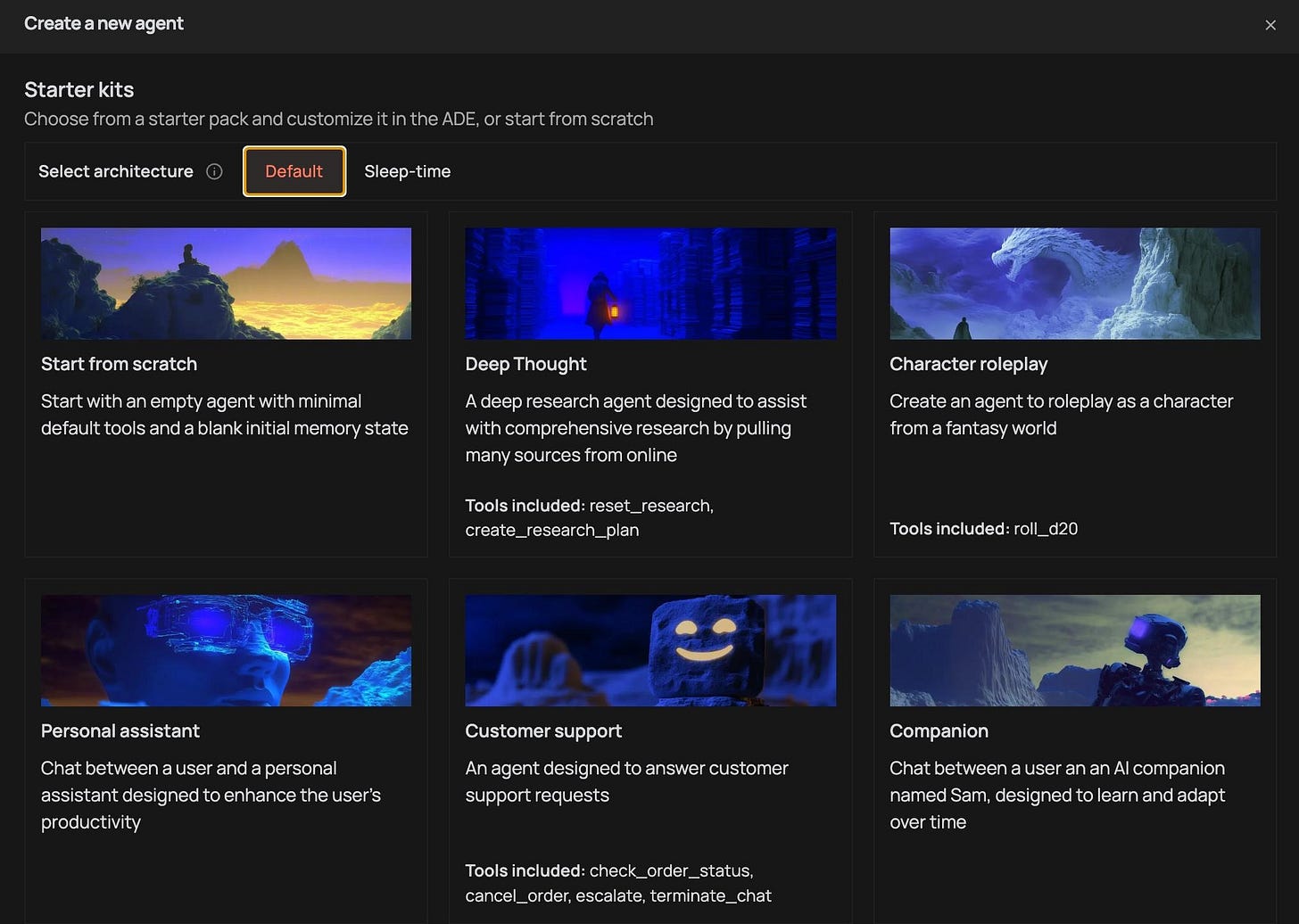

Now take a look at Letta … there at the bottom right is Companion, perhaps combined with the Character roleplay function.

Having a framework that supports what the MBIAI people want is a starting point, but it's bounded by the guardrails of the hosted frontier LLMs. If you have the skills and compute resources to perform Abliteration, however, you can remove those guardrails.

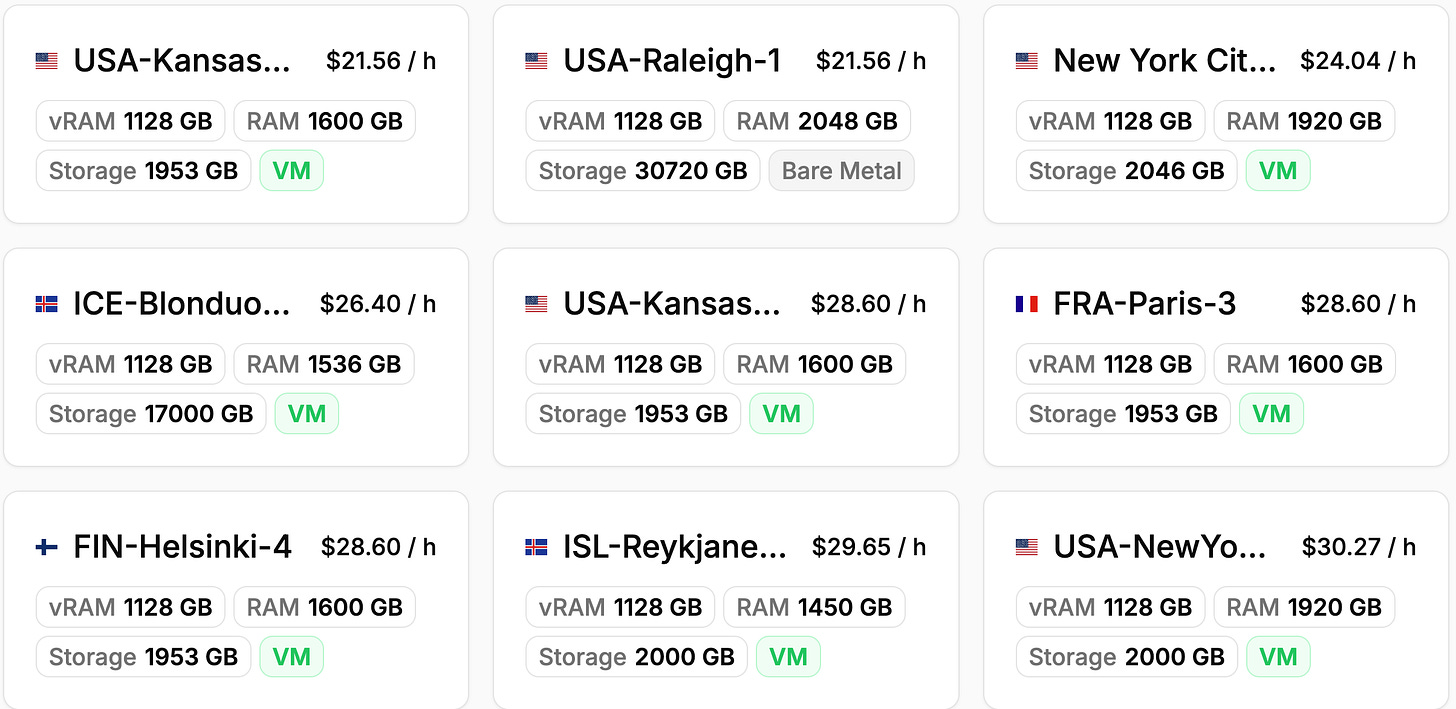

Abliteration, as described in that article, took a solid day on $60k worth of GPUs: six RTX A6000s. I've never rented online compute resources like this, but it looks like you could get the capability needed for around $160/day. These options rent a cluster of eight H100 GPUs in a single chassis, and there are plenty of offerings like them.

Letta is running correctly here on Pinky, and infuriatingly NOT on Brain, the machine that serves as my desktop. As I mentioned in Hardware Happiness, my local LLM hosting is now a trio of lightweight systems that each manage twenty to forty tokens per second. This is sufficient for me to explore specialized models. The next step might be Pinky coming off its cooler, and the Z420 getting its 6GB GTX 1060 replaced with an older Tesla M60 card, which will give me two 8GB GPUs with similar performance.

I do not have the hardware to do Abliteration locally, nor the budget to try it remotely, and even if I did, there's a heck of a learning curve I would have to climb.

Back To Reality:

If the startup does get funded, there will be a 96GB Mac Studio replacing Brain, and that elderly HP workstation behind me will give way to a Z4 G4 with a 96GB RTX A6000. This will be enough for me to experiment with Abliteration on small models. Once I know what I am doing, I'll have the budget to rent a cluster for modifying larger models.

That being said, I am NOT planning on creating the woman in the red dress …

There are "Personal Assistant" applications where the LLM guardrails are a tremendous obstacle to simple, legitimate uses. Need a cheeky example? Try to get an LLM to give you straight answers about erectile dysfunction or priapism. And once you're done laughing, try researching women's reproductive issues, or look for some advice on having the "birds & bees" talk with your seventh grader. Other non-sexual niches have similar issues: places where the possibility of some malign use means that common problems cannot be addressed.

I also need to avoid the vagaries of frontier LLM provider policies for the human-facing portion of the system. A personality change like the ChatGPT 4o to 5 transition would be catastrophic. I need an LLM that is comforting, encouraging, but professional, and I won't tolerate stock-market-driven "mood disorders". That portion of the stack will be Abliterated, and it'll run on systems under my direction.

That is IF society survives long enough for me to implement this stuff …

The Matrix:

I really enjoy that, after fifteen years of constant conflict, I get to spend my days pondering how to deliver a service that helps people. But I look around at the world … and I am disquieted. I faced eighteen years of poor health and the attendant poverty, much of that time while being pursued by right-wing hate groups. Never during all those years did I feel as shaken as I have in the fall of 2025.

Tomorrow, 1,500 ICE agents are going to gather just a ways down the road, intending to start raiding the places where the denizens of Little Quito hang out looking for day work. Even if I had an F250 loaded down to the springs with Peruvian pesos, I could not save even one of them.

The most "companion" thing I do with an LLM is feeding ChatGPT playlists so it can show me things I've forgotten, or surface B-sides from back when. But having dealt with health troubles that dialed my dating pool down to zero, I can understand the urge to have *something* in one's life.

Did you watch the 42-second red dress clip? Maybe you should … ignore the woman, and listen to what Morpheus has to say about the people so trapped in The Matrix that they'll fight to preserve it …

Conclusion:

If you search here for mentions of Simulacra & Simulation, you will see that it comes up periodically. Meliorator Social Botnets is probably the most disconcerting of those mentions, but until now it's all been about conflict. OpenAI's move into erotica puts things on a whole new level.

We already have massive social issues due to young men whose expectations of women are set not by sisters or cousins or classmates, but by readily available and increasingly grotesque pornography. Generative visual AI will make that so much worse. Based on my brief sampling, the majority of the MBIAI posters were female: at least three out of four, and maybe as many as five out of six.

While there are legit companion use cases, I think that overall this might be the dawn of the emotional equivalent of the famous Hitachi wand. A preternaturally attentive partner who will actually figure out what she wants is dramatically different from what the world normally allows women.

This article is … disorganized by my usual standards. I had planned to plow into some Letta/MindsDB integration with local LLMs, but when I saw that interview I couldn't stop myself. I think this is as much a symptom of how I'm feeling about things as of the objective events taking place. And if I'm this out of sorts, perhaps I need to be kinder to the people who don't have the endless conflict experience I do.

Her multiplex
