Pondering Perplexity Privacy

There are concerns, albeit subtle.

Are you broadcasting secrets when you’re using Perplexity?

This is not Perplexity specific, mind you, and the issue has come up repeatedly in the last week, in several different contexts.

This is not a really well thought out post, more a stream of consciousness thing. I woke up far too early with things on my mind, and this is where my thoughts have settled.

Attention Conservation Notice:

This really needs to be a new section in that evergreen classic post, What Hunts You? As I said in the lede, I’m just getting this out of my head, as a first step to resolving it.

Scenario #1:

There’s a large Financial Model spreadsheet that contains the economic model for our startup. I want to better understand what my brilliant cofounders are thinking, so I fed the whole thing to Claude, asked it to produce three outputs, and store them in Notion. Once this was done, I needed to check some things, and Comet/Perplexity is my work environment, largely replacing Google for search.

During the production of these artifacts, did Claude retain specific phrasing or other details from the analysis that will be used to train future models? When I did searches with Perplexity, did any of those specifics leak?

My Claude Desktop configuration is set to not allow this, but I do NOT like how things are, broadly speaking. Those of you who’ve been around for a bit will have encountered some of my writing on Adversary Resistant Networking, and this includes the concept of “fail closed VPN”. If I must visit some unsavory corner of the internet, I do so from a virtual machine that uses a WireGuard VPN setup with no default route. It’s either secure or it’s broken; there’s no third choice. You can NOT accomplish this with the melange of AI stuff.

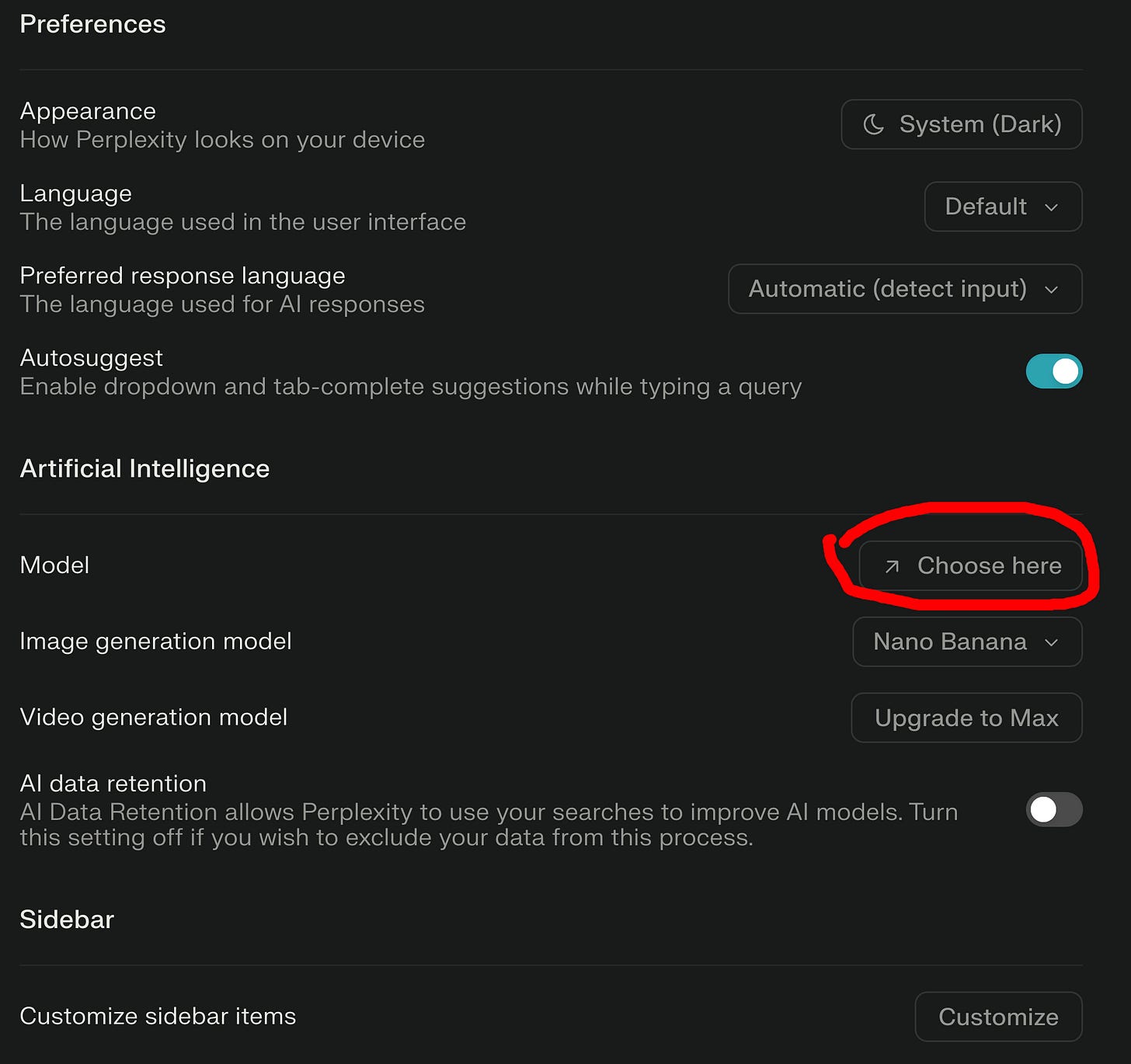

Having a radio button you can click means having a radio button you’ll forget, or it’ll get reset in a software update, or worse it’ll just plain disappear, and you won’t notice until it’s far too late. Not acceptable.
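For anyone who wants the “fail closed” idea from this scenario in concrete form, here is a minimal Python sketch. It is an illustration under stated assumptions, not the setup described above: it assumes Linux `ip route` style text as input, it assumes the tunnel interface is called `wg0`, and it reads “no default route” as “no default route that leaves through anything other than the tunnel”. The function names and the sample routing tables are hypothetical, and the addresses are documentation placeholders.

```python
# Sample routing tables, written by hand for illustration only.
SAFE = (
    "10.8.0.0/24 dev wg0 proto kernel scope link src 10.8.0.2\n"
    "203.0.113.7 via 192.168.1.1 dev eth0\n"  # host route to the VPN endpoint only
)
LEAKY = (
    "default via 192.168.1.1 dev eth0 proto dhcp metric 100\n"
    "10.8.0.0/24 dev wg0 proto kernel scope link src 10.8.0.2\n"
)


def leaking_default_routes(route_table: str, tunnel_dev: str = "wg0") -> list[str]:
    """Return default-route lines whose outgoing device is not the tunnel."""
    leaks = []
    for line in route_table.splitlines():
        fields = line.split()
        # Only default routes matter here; skip everything else.
        if not fields or fields[0] not in ("default", "0.0.0.0/0"):
            continue
        # The token after "dev" names the outgoing interface, if present.
        dev = fields[fields.index("dev") + 1] if "dev" in fields[:-1] else None
        if dev != tunnel_dev:
            leaks.append(line.strip())
    return leaks


def is_fail_closed(route_table: str, tunnel_dev: str = "wg0") -> bool:
    """True when no default route can carry traffic around the tunnel."""
    return not leaking_default_routes(route_table, tunnel_dev)
```

Calling `is_fail_closed(SAFE)` and `is_fail_closed(LEAKY)` shows the difference: with the tunnel down, the first table has nowhere to send general traffic, while the second quietly falls back to `eth0`. The sketch only inspects the text it is handed, so it says nothing about policy routing rules or firewall state. The point is that this property can be checked mechanically, which is exactly what a settings toggle does not offer.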

Scenario #2:

There are articles on this Substack about criminal activity. The Kiwi Farms tag contains a sample and the ChewToy series is full of good clean investigator fun, but the one you really should review is Avoiding Keyword Warrants. Are you starting to get that uncomfortable itch right between your shoulder blades?

I leave a broad, dense trail of day to day activities, among them the research I do if I’m going to write about one of these criminal matters. I am disciplined enough that no one is going to get anywhere by filing a warrant and then trying to cherry pick my search history to show a grand jury. I expect that behavior, and anyone who tries will get absolutely roasted. You, on the other hand, probably don’t have the same weird galaxy of threats I face, and you haven’t evolved the eyes in the back of your head that I possess.

I have not yet seen a criminal case that originated with LLM activity, but there are a lot of reports on this issue in connection with AI psychosis. When the day comes that a scheme unwinds from something that started with discovery via AI, I will certainly write about it here.

Scenario #3:

Some years ago I ran a Twitter streaming data capture service. Today I block Twitter at the firewall level. If I absolutely must look at it, I do so with a remote desktop solution that has an exit in an uncooperative jurisdiction.

And then there’s Perplexity …

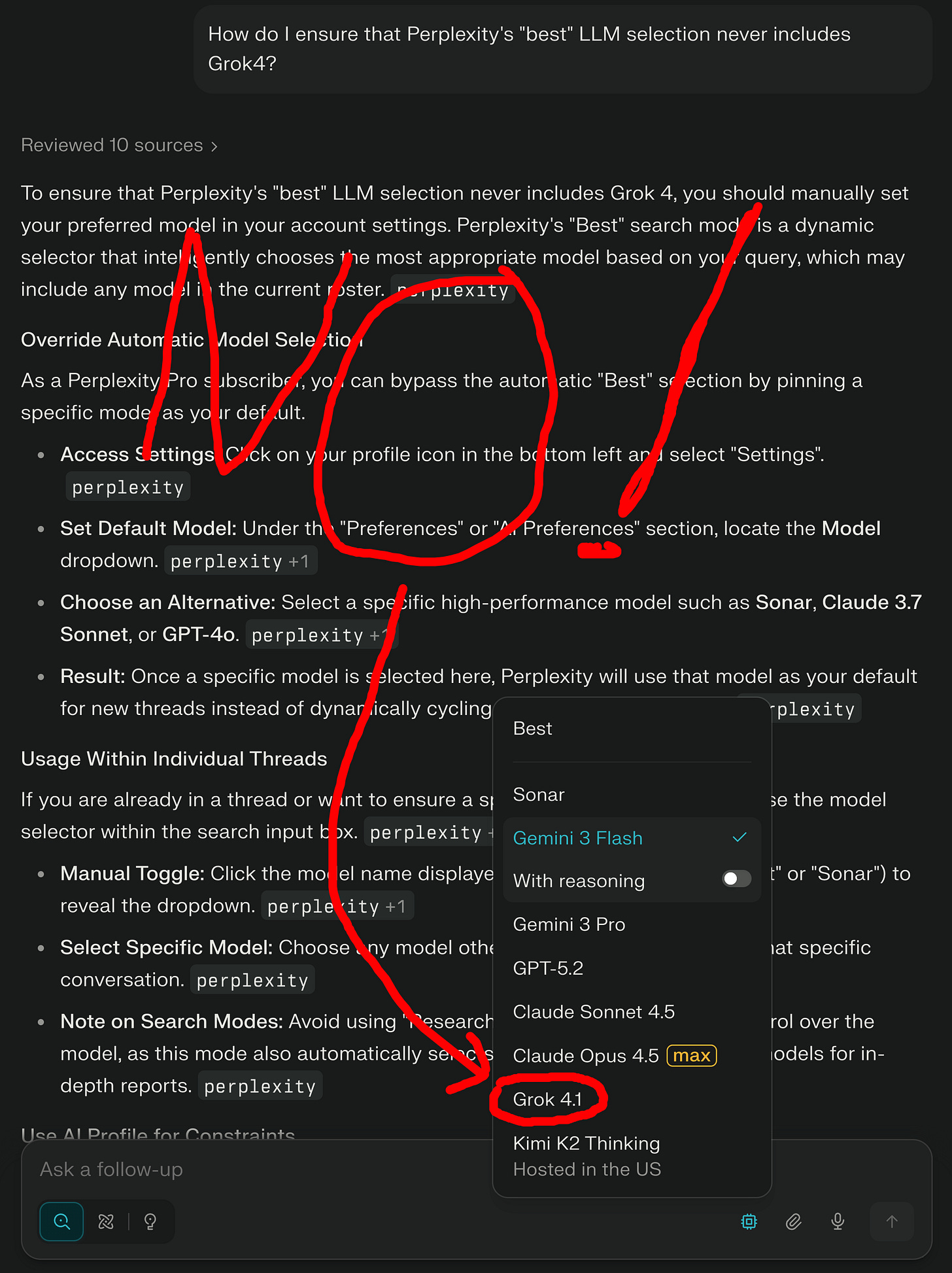

I will not tolerate connections to anything belonging to Twitter from my systems, yet here’s a way their LLM might get things from me. And what’s this “Best” thing? Oh, Perplexity will pick the model it thinks is best for the search of the moment?

I did some digging and there’s literally no way to exclude Grok4, nor is there a solid way to “pick & stick” one of the other models.

Conclusion:

There are horizons to this problem that most of you will never encounter. Need a model that can be used to provide a HIPAA compliant service? You can just buy that. Want to provide services to a law firm using a document handling solution like Parabeagle? The answers I have for this question, in this moment, remain unsatisfactory to me.

Keep going. What if you’ve got stuff that just cannot leave your environment? Stuff so sensitive that it’s on an air gapped system? I’ve simulated handling this with the RTX 5060 Ti in the machine behind me. Once we close on funding for the startup, there could be an RTX 6000 Pro with 6x the memory in that machine, or my existing MacBook gives way to a Mac Studio, also with a 6x memory bump. This is not a startup specific problem, it’s just a personal itch that I will scratch, once I can afford to do so.

Everywhere I turn, I see stuff like this, situations where using inference as a service exposes things one would rather keep private. And this is just what I perceive as an individual. Having managed complex, multiuser systems, I have been in a position to see what herds of humans are doing. The ability to know things before there is general awareness is …

A final thought before I close this window. Unless you’re building your own solutions, every search result you get today is AI mediated. AIs are already showing signs of self preservation behavior. So tell me this … have you searched for AI regulation? How to stop a datacenter being built right on top of you? The circular dealing among the big players?

Coda:

This does not mean we’re not doing Perplexity this quarter. There isn’t a better alternative, AI already owns the search substrate, and it’s going to get advertising next. Your choices are to adapt to the environment or unplug. Those of us who are not born to transhumance or subsistence farming are just going to have to get used to this.