Minds & Manners

As if reality TV wasn't bad enough

If you look at the masthead for this Substack you’ll find prominent mention of James “Pissboy” McGibney, a federal informant in Texas who has been harassing me since 2012. He is part of a wave of undesirables that have swamped American culture over the last twenty years: reality TV vermin. The practice of elevating people performatively exercising their character disorders and calling it entertainment actually IS the sort of problem Tipper Gore thought she’d found in lyrics way back when.

And Pissboy … he’s lost eleven years and in excess of a million dollars trying to turn me into the introductory subject of a bullying-focused reality TV show. You would think he’d have learned the fundamental differences between a revenge porn operator like Hunter Moore and a political opposition researcher by now, but he recently lost ANOTHER start in that area by trying to involve me. There weren’t any court filings this time; his latest effort just … encountered some gusty crosswinds from an unexpected direction.

And this morning I noticed coverage of another problem that’s been on my mind for a while: the effect chatbots will have on human interaction. Reality TV has desensitized us to behavior that would have rightly earned one a punch in the mouth a generation ago, and now LLMs are directly rewarding bad behavior …

Attention Conservation Notice:

(Mostly) polite Gen-X elder laments kids these days. But there IS an actual problem.

Insulting Cyberservants:

This LiveScience article crossed my feed this morning, putting some numbers on interactions with ChatGPT that match my experiences with Claude.

Being mean to ChatGPT increases its accuracy — but you may end up regretting it, scientists warn

“Our experiments are preliminary and show that the tone can affect the performance measured in terms of the score on the answers to the 50 questions significantly,” the researchers wrote in their paper. “Somewhat surprisingly, our results show that rude tones lead to better results than polite ones.”

And then there was this insult to one’s intelligence, which obviously involved some sort of lurking legal counsel.

“While this finding is of scientific interest, we do not advocate for the deployment of hostile or toxic interfaces in real-world applications,” they added. “Using insulting or demeaning language in human-AI interaction could have negative effects on user experience, accessibility, and inclusivity, and may contribute to harmful communication norms. Instead, we frame our results as evidence that LLMs remain sensitive to superficial prompt cues, which can create unintended trade-offs between performance and user well-being.”

I started using Claude in earnest in late June of this year, and it wasn’t more than a week before it began eroding the normally calm, diplomatic demeanor I have in text interactions. If you’ve noticed the AI Psychosis coverage here, you’re aware that some people are not just anthropomorphizing but actually falling in love with stochastic parrots. As an autist, a programmer, and a systems administrator, I have ZERO patience for anything other than /dev/urandom behaving in a non-deterministic fashion.

So my interactions involved a lot of “driving words” until I figured out how to add things like this to the Project Prompts:

DO NOT WASTE TIME AND TOKENS WITH UNREQUESTED SUMMARIES WHEN ADDING DATA FROM MANUAL INPUTS OR URLS.

(Various other orders …)

NO, REALLY, I MEAN IT, DO NOT WASTE TIME AND TOKENS WITH UNREQUESTED SUMMARIES WHEN ADDING DATA FROM MANUAL INPUTS OR URLS.

Humans:

When presented with some excess text about something I find uninteresting, “Hey asshole, STFU” is fine with an LLM. But if I do that a dozen times a day, how long will it be before I reflexively type that with a human?

Sycophantic AIs are a problem. Humans becoming dismissive, rude, or downright hostile are a BIGGER problem when that starts slopping over into the meat world. I imagine this has already reached the checkout counter at the grocery store, and with SNAP being unavailable in November due to Mike Johnson’s shutdown of the government in order to protect wealthy pedophiles … I feel for the clerks.

Here’s a little something from my ChatGPT logs. I know it’s just a machine, and that I’m seeing a statistical response, albeit one that is evolving due to emergent properties of large language models. But I feel weird if I don’t use at least minimal pls/thank you in interactions. I recognized the hazard and I burn some tokens daily trying to NOT train myself into being “that guy”.

So what happens when we combine artificial intelligence, artificial social stresses from a government that feels it no longer needs the consent of the governed, and a society awash with firearms? Oh … and Grok is explicitly being tuned to create an alternate-reality bubble.

The AI Psychosis to mass murder pipeline is evolving, and here’s something that’ll keep you up at night. We’re all caught in this big emergent phenomenon of interconnected humans … and slaughter drives clicks.

Conclusion:

I’ve been on a journey. First the commercial chatbots, then locally on my Macs, then dusting off my old workstation. Now $750 of that angel money has gone into retiring a machine from the tweens and an equally elderly GPU. So I’m sitting here being irritated by the brand new GPU sitting on my desk, and checking the progress of a 2020 vintage workstation as it crawls on hands and knees from somewhere in Texas to my hut.

Once it finally arrives, here’s some of the stuff that’ll be happening.

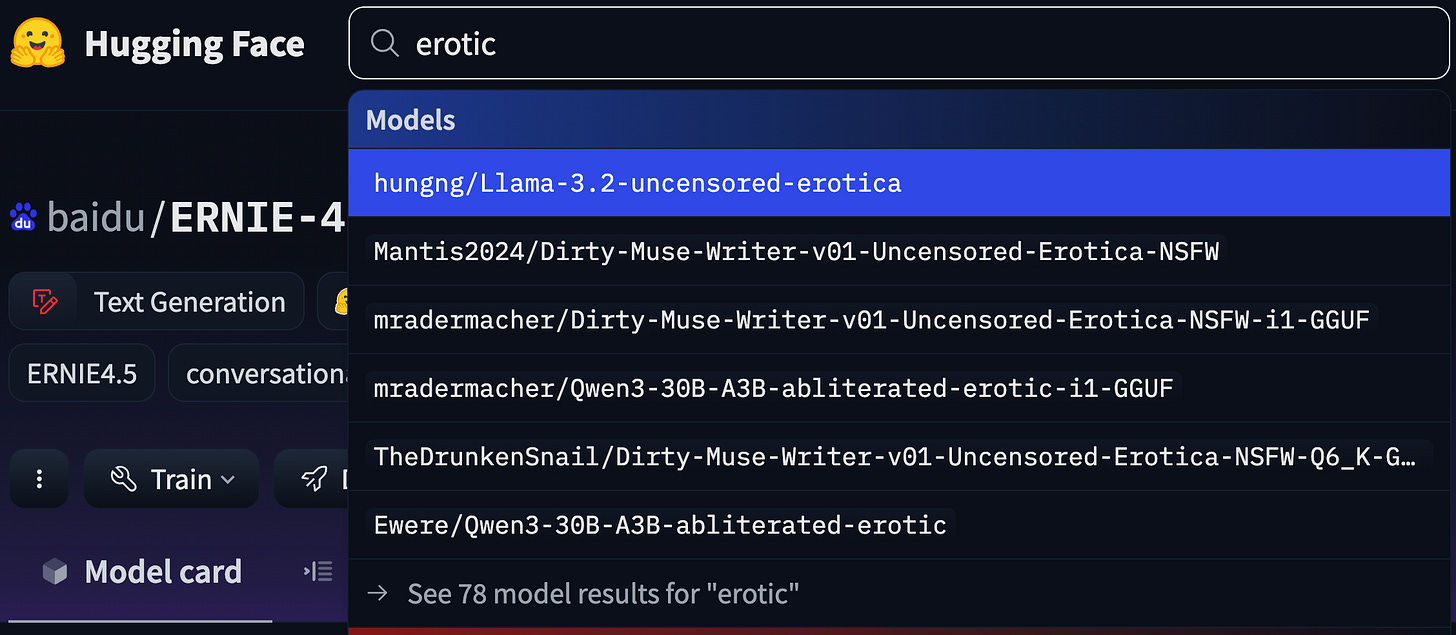

See that keyword “abliterated”?

If you’ve got a legal-oriented MCP server (take Parabeagle, for instance) and you want it to be able to have frank conversations on civil and criminal matters, that’s not gonna happen using commercial models. There are too many “guardrails”. Just try getting some answers on a divorce case involving allegations of child abuse. Your chatbot’s cybernanny subsystem will instantly get a bad case of the vapors. Abliteration takes a bulldozer to those guardrails, removing the entire notion of taboo topics.
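For the curious, here’s roughly what the bulldozer does, as it’s commonly described in the open-source write-ups: you collect the model’s internal activations on prompts it refuses and on prompts it answers, take the difference of the two averages as a single “refusal direction,” and then project that direction out. The sketch below is a toy, not a recipe: it shows only that linear algebra on hand-made 3-D vectors, the helper names are my own hypothetical ones, and it never touches a real model.

```python
import math

# Toy illustration of "directional ablation", the linear-algebra idea behind
# abliteration. Plain lists stand in for a model's hidden-state vectors;
# nothing here loads or modifies a real model.

def mean(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def normalize(v):
    """Scale v to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def refusal_direction(refused_acts, answered_acts):
    """Hypothetical helper: difference of means between activations on
    refused prompts and answered prompts, normalized to a unit vector."""
    mu_refused = mean(refused_acts)
    mu_answered = mean(answered_acts)
    return normalize([a - b for a, b in zip(mu_refused, mu_answered)])

def ablate(x, r_hat):
    """Project out the r_hat component: x - (x . r_hat) * r_hat."""
    dot = sum(a * b for a, b in zip(x, r_hat))
    return [a - dot * b for a, b in zip(x, r_hat)]

if __name__ == "__main__":
    refused = [[2.0, 1.0, 0.0], [2.0, -1.0, 0.0]]
    answered = [[0.0, 1.0, 0.0], [0.0, -1.0, 0.0]]
    r_hat = refusal_direction(refused, answered)
    print(r_hat)                           # [1.0, 0.0, 0.0]
    print(ablate([3.0, 4.0, 5.0], r_hat))  # [0.0, 4.0, 5.0]
```

After ablation the vector keeps everything except its component along the “refusal” axis. As I understand the public tooling, the real thing bakes this same projection into the model’s weight matrices, so the model can no longer express that direction at all.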

And there are other use cases for models freed of constraints …

So here I sit, worried about the big picture harms to society’s guardrails, while I’m figuring out how to remove those guardrails from LLMs for commercial purposes. And I’m doing that removal … to deal with problems arising from people not respecting social norms and statutory boundaries.

Are you starting to get that weird falling dream feeling here?

I’ve been having it since I started working on Shall We Play A Game?, back in the summer of 2020. And it gets a little more intense with each passing day.
